Generative AI has become a powerful tool for creating diverse content, from text to images and even music. While the technology brings exciting possibilities, it also raises important concerns about intellectual property rights. In this article, we’ll explain how to block AI crawlers like OpenAI’s GPTBot by using robots.txt.

Why Block AI Crawlers?

Generative AI models, such as those built by OpenAI, are trained in part on content gathered by crawling websites, which can lead to unauthorized reproduction of copyrighted material. Laws governing AI-generated content are still unclear, leaving creators vulnerable to unauthorized use of their work.

Blocking AI crawlers like GPTBot can help protect your intellectual property, privacy, and brand integrity, and it can also reduce server load.

To stop GPTBot from accessing your website, add a rule for it to your site’s robots.txt file.

Blocking AI Crawlers like GPTBot with robots.txt

To prevent GPTBot from crawling your website, add a simple directive to the robots.txt file. This file, located at the root of your website, tells web crawlers which parts of your site they are and are not allowed to access.

Robots.txt functions like a ‘no entry’ sign, guiding bots on how to crawl a website. By default, search engine crawlers index a site automatically. Without a ‘no entry’ sign, a bot will crawl everything it can reach.

First, identify the AI crawlers you want to block by their user-agent names, which you can find by examining your server logs, your analytics tool, or the official website of the AI provider. For OpenAI, the company behind ChatGPT, the crawler’s user agent is GPTBot.
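If you want to check your server logs for AI crawlers, a short script can do the counting. The following is a minimal sketch, assuming your access log uses the common "combined" format, where the user agent is the last quoted field on each line. The function name `count_ai_crawler_hits`, the list of crawler names, and the sample log lines are all made up for illustration; adapt them to your own logs.

```python
import re
from collections import Counter

# Assumed list of AI crawler names to look for; extend it as needed.
AI_CRAWLER_NAMES = ("GPTBot", "CCBot")

# In the combined log format, the user agent is the last double-quoted field.
USER_AGENT_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_ai_crawler_hits(log_lines, names=AI_CRAWLER_NAMES):
    """Count requests per AI crawler name found in the user-agent field."""
    hits = Counter()
    for line in log_lines:
        match = USER_AGENT_PATTERN.search(line)
        if not match:
            continue  # Skip lines that do not end with a quoted field.
        user_agent = match.group(1).lower()
        for name in names:
            if name.lower() in user_agent:
                hits[name] += 1
    return hits


# Made-up sample lines, for illustration only.
sample_log = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [10/Oct/2023:13:56:01 +0000] "GET /blog HTTP/1.1" 200 2048 "-" "CCBot/2.0"',
    '198.51.100.7 - - [10/Oct/2023:13:57:12 +0000] "GET /about HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

print(count_ai_crawler_hits(sample_log))
```

To use it on a real site, you would pass the lines of your own access log instead of `sample_log`.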

Once you have identified the AI crawlers, add them to the robots.txt file using the following directive:

User-agent: GPTBot
Disallow: /

This directive tells OpenAI’s GPTBot not to crawl any pages on your site.
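One way to sanity-check a rule like this before uploading it is to feed it to a robots.txt parser and ask what it allows. Here is a minimal sketch using Python's standard `urllib.robotparser` module. The URL `https://example.com/any-page` and the name `SomeOtherBot` are placeholders, not real targets.

```python
from urllib.robotparser import RobotFileParser

# The two-line rule from the article, one directive per list item.
robots_txt_lines = [
    "User-agent: GPTBot",
    "Disallow: /",
]

parser = RobotFileParser()
parser.parse(robots_txt_lines)

# A compliant crawler asks this question before fetching a page.
gptbot_allowed = parser.can_fetch("GPTBot", "https://example.com/any-page")
other_allowed = parser.can_fetch("SomeOtherBot", "https://example.com/any-page")

print(gptbot_allowed, other_allowed)  # prints: False True
```

The result shows that the rule applies only to the named user agent. Crawlers that are not listed remain free to crawl the site.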

Where to Edit the robots.txt File?

You can edit the robots.txt file and upload it manually to the /public_html folder of your website.

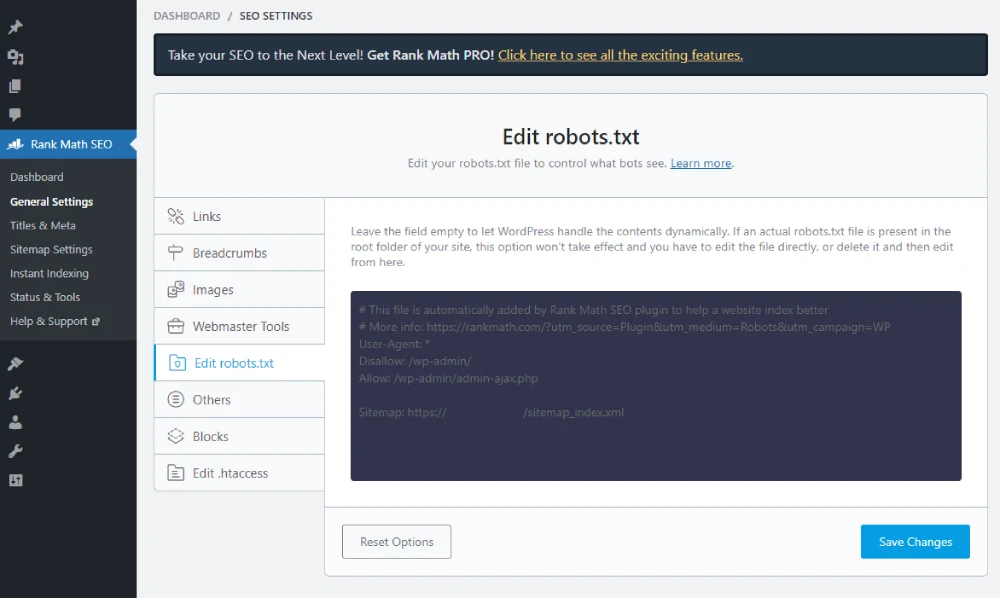

If you don’t want to work with the backend and your site runs on WordPress, you can use third-party SEO plugins like Yoast SEO and RankMath to edit the robots.txt file from your dashboard.

In Yoast SEO, go to Tools > File Editor > Create robots.txt file, then add your directive to block AI crawlers like GPTBot and CCBot.

In RankMath, go to General Settings > Edit robots.txt file, then add your directive to block AI crawlers like GPTBot and CCBot.

Once these rules are in place, GPTBot, CCBot, and any other crawler you list that respects robots.txt will stop crawling your site and using it as material for AI-generated content.
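When you block more than one crawler, each one gets its own group with a User-agent line followed by a Disallow line. The sketch below generates that text for a list of crawler names. The helper name `build_robots_txt` is hypothetical. It blocks the whole site for every listed crawler and does not preserve any rules you already have, so treat its output as something to paste into your existing file rather than a replacement for it.

```python
def build_robots_txt(blocked_agents):
    """Return robots.txt text that disallows the whole site for each agent."""
    groups = []
    for agent in blocked_agents:
        groups.append(f"User-agent: {agent}\nDisallow: /")
    # Separate groups with a blank line and end the file with a newline.
    return "\n\n".join(groups) + "\n"


print(build_robots_txt(["GPTBot", "CCBot"]))
```

The printed text can be pasted into the Yoast SEO or RankMath editor, or saved as robots.txt and uploaded manually.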

However, robots.txt is not an infallible way to block AI crawlers. It is a set of guidelines that only well-behaved crawlers follow. Badly behaved crawlers can simply ignore the robots.txt rules.